What are function tool calls in OpenAI?

A guide to understanding function tool calls in OpenAI.

Function tool calls. Have you heard this term a lot?

Let’s break down what exactly they are, when to use them, and how to use them.

1/ Function tool calls are an interface that lets an LLM ask your application to run regular functions from your codebase. The model does not run anything itself: it picks one of the predefined backend functions and supplies the arguments, your code executes it, and the result is fed back to the LLM. The LLM can then use that result to give a more accurate, better-grounded answer.

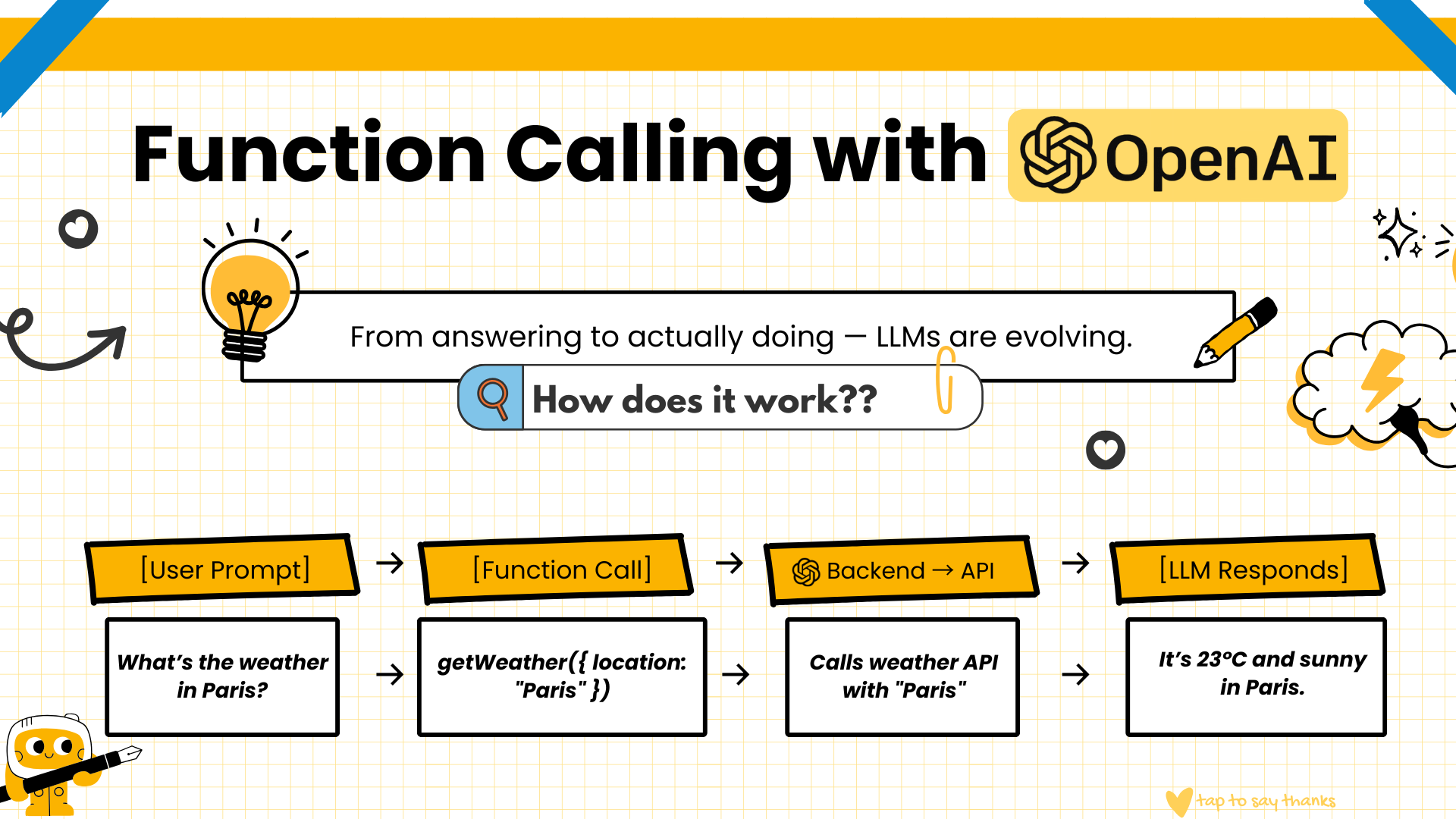

2/ How does it work behind the scenes?

- The user enters a prompt, and your application sends it to the LLM along with a list of functions at the LLM’s disposal

- The LLM reads the intent and, if one fits, chooses a matching function from the list you provided

- Instead of a normal answer, it returns a special response, called a function tool call, with the function name and the parameters needed to execute it

- Your backend executes the function and gives the result back to the LLM

- The LLM uses the result to write its final response
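The backend side of these steps can be sketched in a few lines of Python. This is a minimal sketch, not a definitive implementation: `get_order_status` and the registry are hypothetical names made up for illustration, the tool call is hard-coded rather than fetched from the API, and the dictionary shapes assume the Chat Completions-style format (a `function` object with `name` and a JSON string of `arguments`, answered by a `tool` message).

```python
import json

# Hypothetical backend function; a real one would query a database or an API.
def get_order_status(order_id: str) -> dict:
    return {"order_id": order_id, "status": "shipped"}

# Registry mapping the names advertised to the model to real callables.
AVAILABLE_FUNCTIONS = {"get_order_status": get_order_status}

def run_tool_call(tool_call: dict) -> dict:
    """Execute one function tool call and build the message that carries the result back."""
    name = tool_call["function"]["name"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"Model asked for an unknown function: {name}")
    # Arguments arrive as a JSON string, so parse them before calling the function.
    arguments = json.loads(tool_call["function"]["arguments"])
    result = AVAILABLE_FUNCTIONS[name](**arguments)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }

# Stand-in for what the model returns (hard-coded here; no API request is made).
simulated_tool_call = {
    "id": "call_123",
    "type": "function",
    "function": {"name": "get_order_status", "arguments": '{"order_id": "A42"}'},
}

tool_message = run_tool_call(simulated_tool_call)
print(tool_message["content"])
```

In a real integration, `tool_message` would be appended to the conversation and sent back to the model so it can write the final response. Rejecting unknown function names is a deliberate choice here, since the model's output should be treated as untrusted input.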

3/ Why is this different from regular LLM interaction?

On its own, an LLM can only answer from what it learned before its training cutoff date. With function tool calls, it can also pull live data from your system’s APIs and trigger actions through them.

In other words, your LLM interactions no longer just return information; they can also get things done.

4/ Where can function calling be used?

Here are a few examples:

- Send an email after a successful customer interaction

- Send a summary of the interaction after a therapy session

- Upload a report to S3 after an analysis conversation

In each of these cases, the result is not just an answer, but an answer plus an action taken.

5/ Where should you avoid using function calling?

Here are a few rules of thumb. You can skip function calling when:

- The use case doesn’t involve real-time data or actions

- You only need generative answers

- No backend is present to execute the functions

6/ What do you need to define to use function tool calls?

Before you call the LLM, you must define the following for each function:

- Function names

- Expected inputs

- Descriptions that help the LLM understand when to use them
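Here is what those three pieces can look like in practice. This is a sketch under assumptions: `send_email` and its parameters are hypothetical, and the layout follows the Chat Completions-style `tools` entry, where inputs are described with JSON Schema. Other OpenAI endpoints use a slightly different shape, so check the current API reference before copying it.

```python
import json

# Hypothetical tool definition: a name, a description, and the expected inputs.
send_email_tool = {
    "type": "function",
    "function": {
        # Function name the model will refer to in its tool call.
        "name": "send_email",
        # Description that helps the model decide when to use the function.
        "description": "Send a follow-up email to a customer after a support conversation ends.",
        # Expected inputs, written as a JSON Schema object.
        "parameters": {
            "type": "object",
            "properties": {
                "to": {"type": "string", "description": "Recipient email address"},
                "subject": {"type": "string", "description": "Email subject line"},
                "body": {"type": "string", "description": "Plain-text email body"},
            },
            "required": ["to", "subject", "body"],
        },
    },
}

# The list of tools is what gets sent to the model alongside the prompt.
tools = [send_email_tool]
print(json.dumps(tools, indent=2))
```

A specific description tends to matter more than it looks: the model has only the name, the description, and the parameter descriptions to decide whether the function fits the user's request.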

7/ How much effort does it take to set up this process?

- It needs some setup, since we need to define the functions and maintain business logic

- It needs a backend to execute the functions

Hopefully, the points above helped you understand function tool calls. There is more to the topic than I could cover in this thread, but function tool calls are a stepping stone to understanding MCP (Model Context Protocol). Hope this was helpful, guys!